Having dipped my toes into 10GHz EME using the antenna rotator I already had for VHF/UHF LEO satellites, I was faced with the need to upgrade my setup if I wanted to do it more reliably.

It was possible to make contacts with my existing setup, but it was a real annoyance. It sat on a thin aluminium pole that moved like a pendulum in the wind, and the rotator was inaccurate (for microwave use), with a lot of backlash and tracking error. The rotator wasn’t meant for this sort of use, so I am still happy it got me off to a good start on 10GHz EME without a significant initial expense that may have put me off trying.

There did not appear to be many commercial amateur radio rotators offering the 0.1 degree accuracy and low backlash that I would want for my current dish, or a larger one, and those that might be suitable came with an eye-watering price tag. So I made the decision to try to DIY it, mostly copied from people smarter than me with very few original thoughts.

I later noticed a commercial option using slew drives from RF Hamdesign, but I had the drive and parts here by that point so couldn’t take the easy option of buying my way out of the problem.

Dual Axis Slew Drive

Having seen discussion on the moon-net reflector about options, I settled on using a dual axis slew drive rotator which seems to be a very popular choice. These seem to be mainly provided for use rotating solar arrays so they are fairly common devices, are not expensive and do the trick for radio use very well. It will also be suitable should I upgrade to a larger dish in the future.

The rotator was ordered from CoreSun drive and I settled on the SVH3-62B-RC-24H3033-REV.A as described at: http://www.coresundrive.com/en/product/Solar_Tracking/2019/0729/93.html

In its standard form this is slow for general amateur radio use, requiring a full 21 minutes for a 360 degree rotation, which is not handy for contests or LEO satellites that need quick repositioning. They can, however, be ordered with different gearing to allow faster movement, but as I’m using this for the slow moving moon I stuck with the standard option.
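As a rough sanity check on those numbers, here is a minimal Python sketch comparing the drive speed with how fast the moon moves across the sky. The 21 minutes per 360 degrees is the figure quoted above. The moon rate of about 14.5 degrees per hour is my own assumption (Earth's 15 degrees per hour rotation less the moon's orbital motion), and the function names are just for illustration. The azimuth rate can be considerably higher than this when the moon is near the zenith, so treat it as a ballpark only.

```python
# Slew rate of the standard drive, from the 21 minutes per 360 degrees quoted above.
FULL_ROTATION_DEG = 360.0
FULL_ROTATION_MIN = 21.0

# Assumption (not from the drive documentation): the moon's apparent motion
# is roughly 14.5 degrees per hour across the sky.
MOON_RATE_DEG_PER_HOUR = 14.5


def slew_rate_deg_per_min(rotation_deg=FULL_ROTATION_DEG, minutes=FULL_ROTATION_MIN):
    """Drive speed in degrees per minute."""
    return rotation_deg / minutes


def seconds_to_move(angle_deg):
    """Time in seconds for the drive to slew through angle_deg."""
    return angle_deg / slew_rate_deg_per_min() * 60.0


def speed_margin():
    """How many times faster the drive is than the assumed moon rate."""
    moon_deg_per_min = MOON_RATE_DEG_PER_HOUR / 60.0
    return slew_rate_deg_per_min() / moon_deg_per_min


if __name__ == "__main__":
    print(f"Drive speed: {slew_rate_deg_per_min():.2f} deg/min")
    print(f"Time for a 180 degree reposition: {seconds_to_move(180):.0f} s")
    print(f"Margin over assumed moon rate: {speed_margin():.0f}x")
```

Under these assumptions the drive moves at a little over 17 degrees per minute, which leaves a very large margin for moon tracking, but a 180 degree reposition takes around ten and a half minutes, which is why it is a poor fit for LEO satellites.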

After payment was made, air freight took about a week to deliver the small but heavy crate.

Dual Axis Slew Drive Documentation Errors

One thing that was a bit confusing was that the wiring colours on the document supplied with the SVH3 did not match the wiring colours on the SVH3 I received. After some testing I found them to be as follows:

Wire Definition

Pin 1 (black) = motor –

Pin 2 (red) = motor + 24V

Pin 3 (grey) = hall GND (marked yellow on document)

Pin 4 (brown) = hall + (5-12V)

Pin 5 (blue) = hall A

Pin 6 (orange) = hall B (marked green on document)

Pin E (green) = GND (marked grey on document)

Additionally, the gear ratios on the document supplied did not match those marked on the drives that arrived, which took me a while to notice.

Physical Mounting – Dish

One of the successful setups I had seen online and liked the look of was that of N5TM, so I chose to implement a similar mount for the dish, with two plates bolted on to the sides of the rotator. He describes the mounting setup and provides drawings of what he’s used top and bottom on his website here: http://n5tm.com/index.php/2023/10/30/brackets/

By printing the template to scale and centre punching I was able to mark the positioning of the 6x11mm holes of the rotator on the aluminium arm to check and drill. I chose not to trim the corners of the mounting arms off at this stage as they might be useful for fitting some physical stops later.

The Andrews 120cm dish has four mounting holes in a 14cm rectangle so I chose to use a solid aluminium plate affixed to the two arms with some aluminium angle.

The end result with the coach bolts that will attach it to the dish.

One mistake I made was with the length of the support arms. I shortened them a little from the diagrams I was working from, purely because the delivery cost of the aluminium jumped up significantly at the full length, thinking a small change wouldn’t matter with a small dish. Well, it does. As a result I can only go down to 5 degrees elevation before the dish makes contact with the mounting pole. As I don’t have terrestrial microwave possibilities here, and my moon horizon is a minimum of 8 degrees at 10GHz, it’s not a problem for now.

Rotator Controller – K3NG

I’d seen some commercial controllers mentioned, along with some people’s very nice looking DIY setups, and settled on the open source K3NG rotator option. This offers a lot of options for customisation, and is well documented.

I decided to use a Nextion touch screen display, like I’ve used in other projects, with VK4GHZ’s awesome code for the rotator available at https://vk4ghz.com/vk4ghz-nextion-for-k3ng-rotator-controller/ and to not have any buttons, to simplify things a little. The schematic from N5TM available on GitHub helped me get my head around what was needed quickly.

I initially acquired a very cheap second hand 24V Meanwell PSU to use for the rotator, which turned out to be terrible for RF interference on HF. Buy cheap, buy twice.

I also used an RTC on the Arduino to allow the K3NG software to track the moon without needing a computer doing the tracking. This all came together in a big mess on the desk but both axes rotated as expected.

One thing I found was that the K3NG would not work with the INCREMENTAL_ENCODER option as I had assumed it might, because it requires a Z signal from the encoders and these drives only provide hall A and hall B. Patching the requirement out like someone else did made it kind of work but introduced some really odd problems I couldn’t get to the bottom of.

Using PULSE_INPUT with one line seemed to work okay and others have had success with this. Unfortunately it turned out I wasn’t to be one of them.
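To illustrate the general difference between the two approaches, here is a minimal Python sketch, assuming the hall A and hall B outputs are 90 degrees out of phase as is usual for two-channel hall sensors. It is not K3NG code, and the class names `QuadratureCounter` and `SingleLineCounter` are made up for this example. Decoding both lines gives direction for free, while counting one line has to trust the commanded direction.

```python
# Transition table indexed by (previous_state << 2) | new_state,
# where state = (A << 1) | B. Valid steps are +1 or -1; no change
# or an impossible two-bit jump counts as 0.
_STEP = [0, +1, -1, 0,
         -1, 0, 0, +1,
         +1, 0, 0, -1,
         0, -1, +1, 0]


class QuadratureCounter:
    """Counts every A/B transition and works out direction from the phase."""

    def __init__(self):
        self.state = 0
        self.count = 0

    def update(self, a, b):
        new_state = (a << 1) | b
        self.count += _STEP[(self.state << 2) | new_state]
        self.state = new_state
        return self.count


class SingleLineCounter:
    """Counts rising edges on one line and assumes the commanded direction."""

    def __init__(self):
        self.last_a = 0
        self.count = 0

    def update(self, a, commanded_direction):
        if a and not self.last_a:
            self.count += commanded_direction
        self.last_a = a
        return self.count


def run_demo():
    """Two cycles forward then one cycle back, with 'forward' commanded throughout."""
    forward = [(0, 1), (1, 1), (1, 0), (0, 0)]  # (A, B) samples for one cycle
    reverse = [(1, 0), (1, 1), (0, 1), (0, 0)]
    quad, single = QuadratureCounter(), SingleLineCounter()
    for a, b in forward * 2 + reverse:
        quad.update(a, b)
        single.update(a, +1)
    return quad.count, single.count


if __name__ == "__main__":
    print(run_demo())
```

In this toy sequence the quadrature counter ends on a net of 4 transitions (one full cycle forward), while the single-line counter reports 3 pulses because it cannot tell that the last cycle was in reverse. Whether anything like this happens on a real worm-driven slew drive is a separate question; the sketch only shows why a single line carries less information.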

I designed a case with a sliding lid to house the Arduino, Nextion display, optoisolator, relay board, buck converter and connections and 3D printed it. In the unlikely event someone wants this, the STL is available here.

The Arduino would blip the relays on power up and I couldn’t figure out a way to stop this. I couldn’t detect the rotator moving during the short blips but decided not to chance it and settled on a small relay board with a timer to delay powering the relay board up until after the Arduino had booted.

Cabling

I picked a small waterproof enclosure with mounting wings that allowed it to be cable tied to the motors and affixed a terminal block inside this along with 4 holes and waterproof cable glands. I will attach it to the support pole when fully set up.

I decided to use two separate cables for the controller, one carrying the pulse signals and the other carrying power, to reduce the risk of the pulse signals being messed up. I’m not sure if this actually helped or not. I understand many upgrade the rotator to have absolute encoders for accurate position feedback, or add external elevation detection, but I’m going to run with it as is for now and I don’t foresee it being too much of a problem with a 1.2m dish. (I was wrong)

Physical Mounting – Base

My setup needs to sit above a flat roof of a garage in order to get over the top of nearby vegetation and my house. Being on a flat roof accessed by a window in the house allows easy access to the setup. In order to have a vertical pole mounted on TK brackets with the pole going to the ground to support the weight, it needs to be 3.8m long with 1.8m of this above the top bracket. The aluminium pole I had been using was not sturdy enough to support the rotator and dish above and had an alarming amount of movement in even moderate winds.

I settled on 10cm steel box section to give me something that wouldn’t move. On asking G4YTL about how he mounted his dish, I saw he had used similar box section with metal plates on top, one welded to the box section and the other bolted to the rotator, with the two plates connected using bolts. There were a number of variations of this setup online and I liked the simplicity of it so went this route.

I asked a local metal fabrication shop to drill and weld the plates and was pleasantly surprised, as it ended up costing not much more than the metal itself would have cost me. I did however make a mistake in choosing a 6mm wall thickness with 10mm plate, as it made the section ridiculously heavy, requiring me to set up a winch on the side of the house along with some creative guide cables to raise it into place.

As it’s on the inside corner of a wall I’ve used four TK brackets, two on each wall, bolting it in using a mix of resin fixings and wall bolts.

The box section sits on top of an existing 40-50cm deep concrete slab that I can’t dig up to fit any sort of base support.

The Final Setup

And here it is ready to start testing. Some physical stops will be added once I’m happy everything is okay.

Follow-up and New Controller

After using the setup for a month including making a number of EME QSOs without many of the difficulties that plagued my previous setup, I was still having some problems.

The pulse counting seemed to lose my position very quickly after lots of moon tracking movements during a session, as if there was over/under counting going on, especially in elevation. I also had one instance of an unexpected jump in position that never repeated, which worried me a bit. To address the possible pulse counting issue I considered a master/slave setup to reduce the cable length the pulses had to travel.
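To put a rough scale on why miscounted pulses matter at 10GHz, here is a small Python sketch. It uses the common rule of thumb that a dish's half-power beamwidth is about 70 × wavelength / diameter degrees. The 10.368GHz frequency is my assumption for the band segment, and the pulses-per-degree figure is purely hypothetical as I am not quoting the real value for these drives. The function names are illustrative only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def half_power_beamwidth_deg(dish_diameter_m, freq_hz, k=70.0):
    """Rule-of-thumb beamwidth: k * wavelength / diameter, in degrees."""
    wavelength_m = SPEED_OF_LIGHT / freq_hz
    return k * wavelength_m / dish_diameter_m


def pointing_error_deg(miscounted_pulses, pulses_per_degree):
    """Pointing error caused by a given number of lost or extra pulses."""
    return miscounted_pulses / pulses_per_degree


def miscounts_to_reach(error_deg, pulses_per_degree):
    """Smallest whole number of miscounted pulses giving at least error_deg."""
    return math.ceil(error_deg * pulses_per_degree)


if __name__ == "__main__":
    DISH_M = 1.2                 # dish size from this article
    FREQ_HZ = 10.368e9           # assumption: 3cm EME frequency
    PULSES_PER_DEGREE = 100.0    # hypothetical, not the real drive figure

    hpbw = half_power_beamwidth_deg(DISH_M, FREQ_HZ)
    print(f"Approximate beamwidth: {hpbw:.2f} degrees")
    print("Miscounts to drift half a beamwidth:",
          miscounts_to_reach(hpbw / 2, PULSES_PER_DEGREE))
```

With those assumptions the beamwidth comes out at roughly 1.7 degrees, so an accumulated error of well under a degree is already enough to cost signal. The real number of pulses involved depends on the actual gearing and hall sensor resolution, which this sketch does not know.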

For the elevation side I also considered getting a HH12-Inc for an absolute readout and giving up on the pulses there, but failed to acquire one. Additionally, one of the relays gave out, bringing forward my plan to use H bridges instead of relays. While these problems were on my mind and I was waiting on the H bridge bits arriving, I discovered the Arduino itself was causing RFI (I’d assumed it was the PSU) around the IF of the 3cm moon beacon.

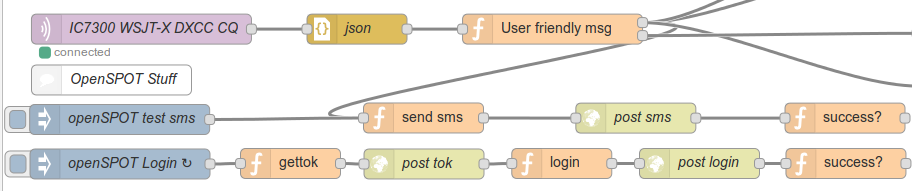

At this point, wanting to just get reliably operational quickly, I decided to shelve the controller. I really do like it, and I know it works for others on 3cm EME, so it could be made to work better with some more time. I plan to use it with my VHF/UHF satellite setup in the future, or for anything else requiring a versatile, easily configured controller system.

The new controller system, WinTrak with two MABMPU boards and the controller software running on a touchscreen Pi has been working well for a few weeks with no obvious issues or adjustments needed over multiple sessions. This solution is pretty much plug and play for these drives so I don’t have much to say other than it works and I’d recommend it!

